Custom Discover Integrations (Import API)

Use Custom Integrations for SaaS discovery

Custom integrations let you provide customer-specific data to SMP for discovery, e.g. to discover SaaS services or SaaS spend.

Reasons to use a custom integration instead of one of the out-of-the-box integrations include:

- the data source is proprietary or an on-prem solution

- there is no existing out-of-the-box discover integration for the data source

- access to the data source cannot be granted due to security or compliance reasons

For custom integrations, we provide a dedicated resource in our cloud infrastructure for ingesting your data.

Configuration required

Please be aware that using custom integrations requires implementation effort on your side: you need to set up the data extraction from the data source and the data transfer to SMP.

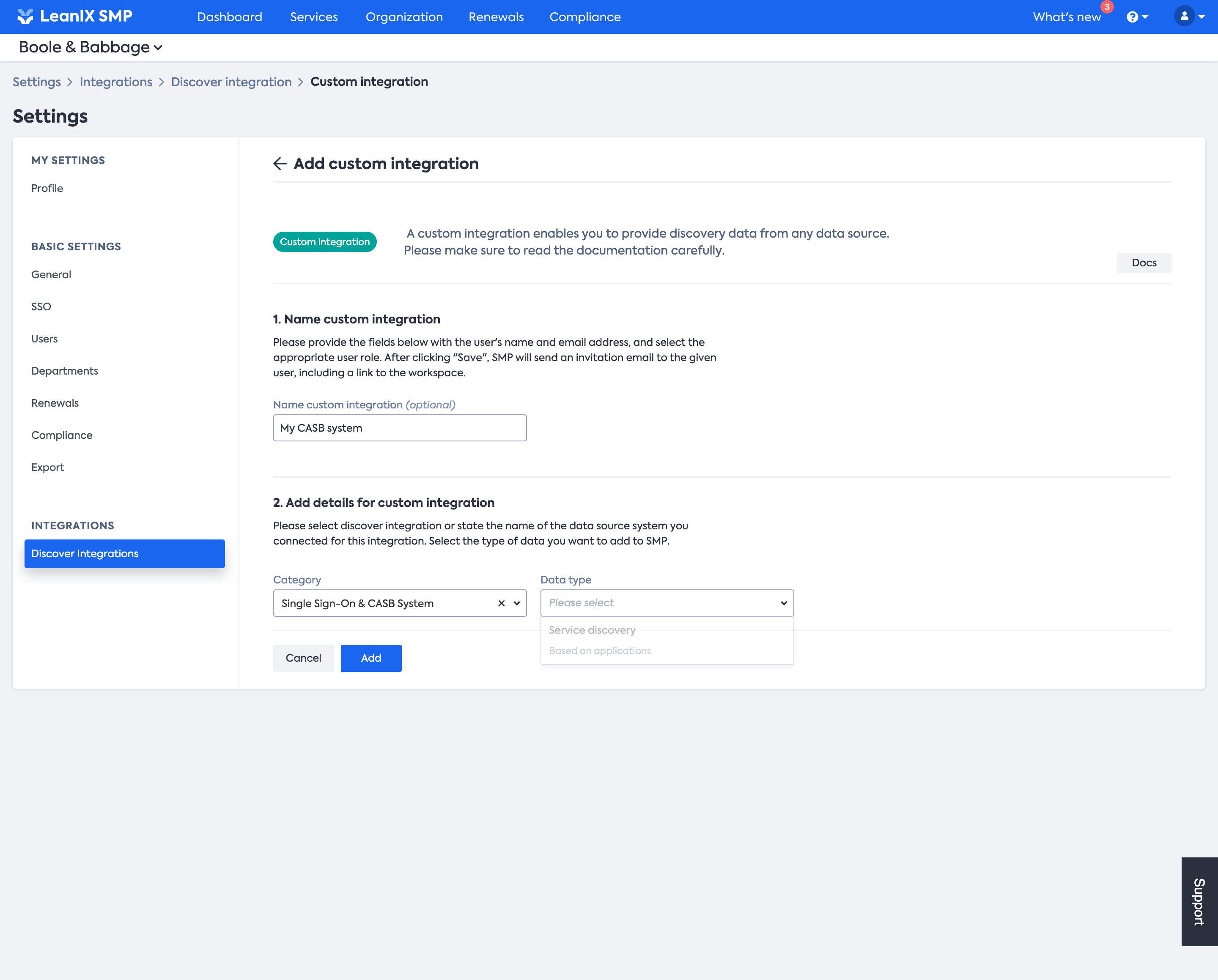

Create a Custom Integration

To set up a custom integration, go to Settings => Discover Integrations.

Click “Add custom integration” to create a new custom discover integration.

Add a name for the custom integration and select the applicable type of discovery. The type of discovery defines the ingestion schema.

Available discovery capabilities

- Single Sign-On & CASB System
  - Service discovery (based on applications): used to discover new SaaS and add the services to your workspace
  - Service and user account discovery (based on applications and users) (available soon): used to discover new SaaS and user accounts
- Financial System
  - Service discovery (based on vendors): used to discover new SaaS and add the services to your workspace
  - Service and spend discovery (based on vendors and invoices): used to discover new SaaS and how much is spent on it
- Contract Systems
  - Service discovery (based on contracts): used to discover new SaaS and add the services to your workspace
  - Service and contract discovery (based on contracts): used to discover new SaaS and the related contracts

More details on Discovery capabilities and data sources can be found here.

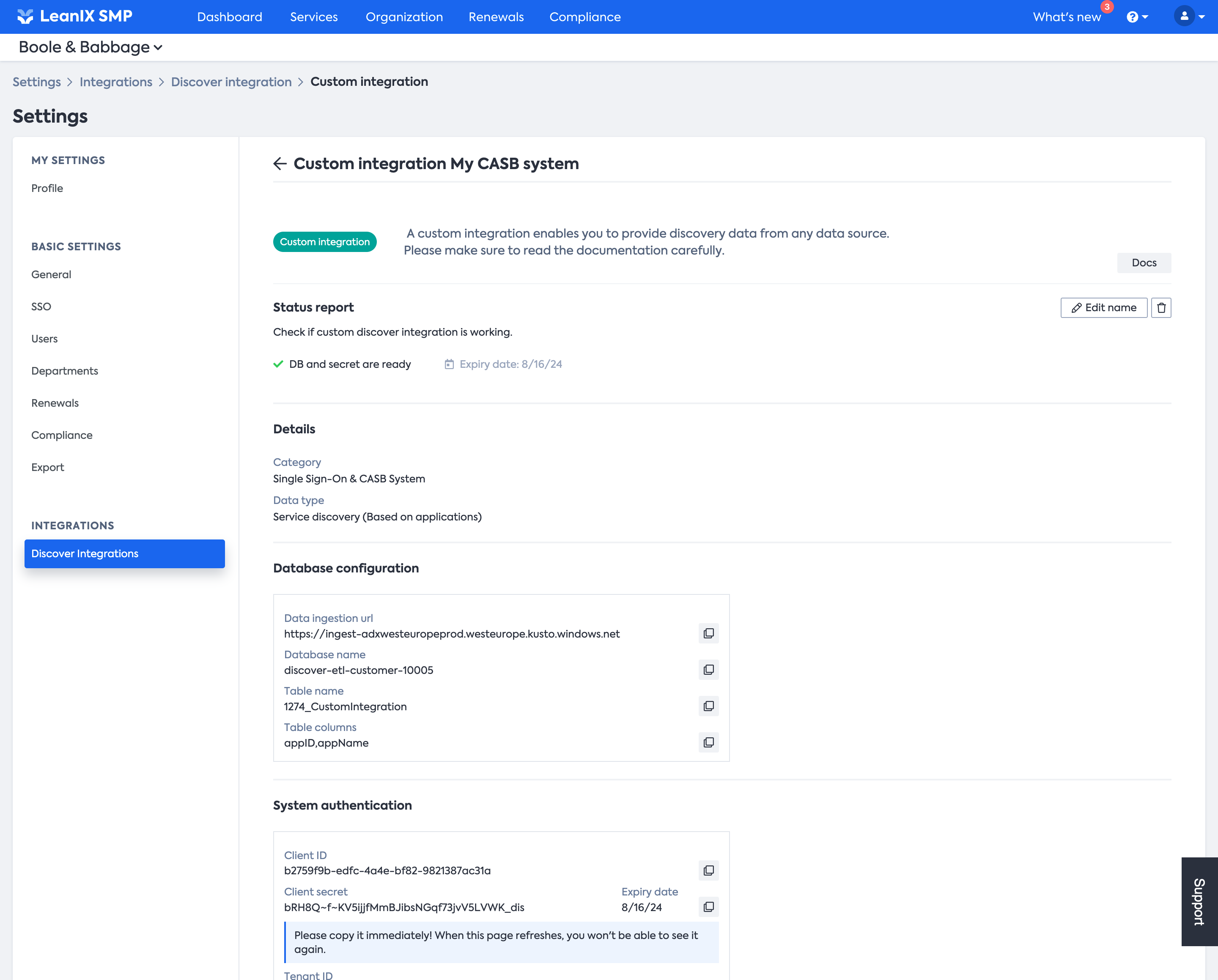

Once you save it, the new custom integration is available.

Authentication details

Make sure to copy the authentication details immediately and store them safely. They are displayed only once; you cannot access them again later.
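Rather than pasting these authentication details directly into scripts, you may prefer to keep them in a secret store or in environment variables and read them at runtime. The following is a minimal sketch of the environment-variable approach; the variable names (`SMP_INGEST_URL`, `SMP_CLIENT_ID`, `SMP_CLIENT_SECRET`, `SMP_TENANT_ID`) and the `IngestCredentials` container are hypothetical and not defined by SMP.

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass


@dataclass(frozen=True)
class IngestCredentials:
    """Hypothetical container for the authentication details of one custom integration."""
    ingestion_url: str
    client_id: str
    client_secret: str
    tenant_id: str


# Hypothetical environment variable names; adjust them to your own conventions.
ENV_VARS = {
    "ingestion_url": "SMP_INGEST_URL",
    "client_id": "SMP_CLIENT_ID",
    "client_secret": "SMP_CLIENT_SECRET",
    "tenant_id": "SMP_TENANT_ID",
}


def load_credentials(env: Mapping[str, str] = os.environ) -> IngestCredentials:
    """Read the credentials from the environment, failing loudly if any value is missing."""
    missing = [name for name in ENV_VARS.values() if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return IngestCredentials(**{field: env[name] for field, name in ENV_VARS.items()})
```

The returned values can then replace the hard-coded placeholders (`cluster`, `client_id`, `client_secret`, `tenant_id`) in the Python example further down, so the secret never ends up in source control.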

Introduction

Data ingestion is based on Azure Data Explorer (ADX).

Azure Data Explorer is a fast, fully managed data analytics service for real-time analysis of large volumes of data streaming from applications, websites, IoT devices, and more. We use Azure Data Explorer to collect, store, and analyze data provided by customers.

For each customer that uses custom integrations, we provide a dedicated database in the region where the customer's SMP workspace resides. Each integration gets its own table in that database.

Importing data

Data can be ingested in multiple ways. For details, refer to the official ADX documentation: Azure Data Explorer data ingestion overview

Data formatting

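Please ensure that the following fields meet these format requirements:

- invoiceDate: date and time in UTC (ISO 8601). The US and German notations below describe the same format; German notation uses JJJJ for the year and TT for the day.
  - US: YYYY-MM-DDThh:mm:ssZ
  - German: JJJJ-MM-TT"T"hh:mm:ss"Z"
- amount: no thousands separator, decimals separated with a period (e.g. 12345.60)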
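If you generate the import data yourself, small helpers can enforce these formats before the data is sent. The sketch below is an illustration, not part of SMP: the function names are hypothetical, naive datetimes are assumed to already be in UTC, and amounts are assumed to be rounded to two decimal places (matching the example 12345.60, using Python's default half-even rounding).

```python
from datetime import datetime, timezone
from decimal import Decimal


def format_invoice_date(value: datetime) -> str:
    """Format a datetime as YYYY-MM-DDThh:mm:ssZ in UTC."""
    if value.tzinfo is None:
        # Assumption: naive datetimes are already expressed in UTC
        value = value.replace(tzinfo=timezone.utc)
    return value.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")


def format_amount(value) -> str:
    """Format a number without thousands separator and with a period as decimal separator."""
    # str() first so floats are not expanded to their full binary representation;
    # assumption: two decimal places, as in the example 12345.60
    amount = Decimal(str(value)).quantize(Decimal("0.01"))
    return format(amount, "f")
```

For example, `format_invoice_date(datetime(2024, 3, 5, 14, 7, 9, tzinfo=timezone.utc))` returns `"2024-03-05T14:07:09Z"`, and `format_amount(12345.6)` returns `"12345.60"`. Timestamps with another UTC offset are converted to UTC first, so the trailing `Z` is always correct.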

Example

The following example uses the Azure Data Explorer Python SDK (packages azure-kusto-data and azure-kusto-ingest, plus pandas for the DataFrame case) to queue data for ingestion and, optionally, to poll for ingestion status:

```python
import io
import pprint
import time

import pandas
from azure.kusto.data import DataFormat, KustoConnectionStringBuilder
from azure.kusto.ingest import (
    BlobDescriptor,
    FileDescriptor,
    IngestionProperties,
    QueuedIngestClient,
    ReportLevel,
    StreamDescriptor,
)
from azure.kusto.ingest.status import KustoIngestStatusQueues

##################################################################
## AUTH ##
##################################################################

cluster = "<insert here the data ingestion URL>"
client_id = "<insert here your client id>"
client_secret = "<insert here your client secret>"
tenant_id = "<insert here your tenant id>"

# Authenticate as an Azure AD application with the details shown in SMP
kcsb = KustoConnectionStringBuilder.with_aad_application_key_authentication(
    cluster, client_id, client_secret, tenant_id
)
client = QueuedIngestClient(kcsb)

##################################################################
## INGESTION ##
##################################################################

database_name = "<insert here the database name>"
table_name = "<insert here the table name>"

ingestion_props = IngestionProperties(
    database=database_name,
    table=table_name,
    data_format=DataFormat.CSV,
    # required if the data includes a header row; remove it otherwise
    additional_properties={"ignoreFirstRecord": True},
    # in case status updates are needed (see INGESTION STATUS below)
    report_level=ReportLevel.FailuresAndSuccesses,
)

# The columns "id" and "name" are illustrative only; your data must match
# the ingestion schema of the selected discovery type.

# ingest from stream (the first line is a header row, skipped via ignoreFirstRecord)
data = "id,name\n1,foo\n2,bar"
stream_descriptor = StreamDescriptor(io.StringIO(data))
result = client.ingest_from_stream(stream_descriptor, ingestion_properties=ingestion_props)

# ingest from file
file_descriptor = FileDescriptor("{filename}.csv")
result = client.ingest_from_file(file_descriptor, ingestion_properties=ingestion_props)

# ingest from blob
blob_descriptor = BlobDescriptor("https://{path_to_blob}.csv.gz")
result = client.ingest_from_blob(blob_descriptor, ingestion_properties=ingestion_props)

# ingest from dataframe: the SDK serializes the DataFrame to CSV without a
# header row, so use properties that do not skip the first record
df_props = IngestionProperties(
    database=database_name,
    table=table_name,
    data_format=DataFormat.CSV,
    report_level=ReportLevel.FailuresAndSuccesses,
)
fields = ["id", "name"]
rows = [[1, "foo"], [2, "bar"]]
df = pandas.DataFrame(data=rows, columns=fields)
result = client.ingest_from_dataframe(df, ingestion_properties=df_props)

# Inspect the result for useful information, such as source_id and blob_url
pprint.pprint(result)

##################################################################
## INGESTION STATUS ##
##################################################################

# If status updates are required, poll the status queues like this.
# NOTICE: to get success status updates, the ingestion properties
# must set report_level=ReportLevel.FailuresAndSuccesses.
qs = KustoIngestStatusQueues(client)

MAX_BACKOFF = 180  # seconds
backoff = 1

# Polls indefinitely; stop the script manually (e.g. Ctrl+C)
while True:
    if qs.success.is_empty() and qs.failure.is_empty():
        print("No new messages. Backing off for {} seconds".format(backoff))
        time.sleep(backoff)
        # exponential backoff, capped at MAX_BACKOFF
        backoff = min(backoff * 2, MAX_BACKOFF)
        continue

    backoff = 1
    success_messages = qs.success.pop(10)
    failure_messages = qs.failure.pop(10)

    pprint.pprint("SUCCESS : {}".format(success_messages))
    pprint.pprint("FAILURE : {}".format(failure_messages))
```
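The file and blob examples above expect CSV files, the blob one gzip-compressed (`.csv.gz`). If you prepare such files yourself, one possible sketch using only the Python standard library is shown below. The function name, file name, and column names are hypothetical; replace the columns with the ingestion schema of your discovery type. The file starts with a header row, which matches ingestion properties that set `ignoreFirstRecord`.

```python
import csv
import gzip


def write_csv_gz(path, columns, rows):
    """Write rows as a gzip-compressed UTF-8 CSV file that starts with a header row."""
    # newline="" lets the csv module control line endings itself
    with gzip.open(path, "wt", encoding="utf-8", newline="") as f:
        writer = csv.writer(f, lineterminator="\n")
        writer.writerow(columns)  # header row, skipped when ignoreFirstRecord is set
        writer.writerows(rows)
    return path


# Hypothetical usage with illustrative columns and already formatted values:
# write_csv_gz("invoices.csv.gz",
#              ["vendor", "invoiceDate", "amount"],
#              [["Acme", "2024-03-05T14:07:09Z", "12345.60"]])
```

The resulting file can be uploaded to blob storage and referenced with `BlobDescriptor`, or passed to `FileDescriptor` directly.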

Status

✅ Ready

Infrastructure is provisioned and waiting for client data.

✅ Active

We are receiving and processing new data.

⚠️ Warning

No data has been received in the past 24 hours OR

Credentials are about to expire within the next 3 months.

❌ Failed

Credentials have expired and have to be rotated OR

Something went wrong while processing data. For more information, please contact support.
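Since an integration shows Warning when its credentials expire within the next 3 months and Failed once they have expired, you may want to track the expiry date on your side and rotate the credentials in time. The sketch below assumes you record the expiry date yourself. The function name is hypothetical, and approximating "3 months" as 90 days is an assumption, not necessarily the exact rule SMP applies.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Assumption: the "3 months" warning window is approximated as 90 days
WARNING_WINDOW = timedelta(days=90)


def credential_state(expires_at: datetime, now: Optional[datetime] = None) -> str:
    """Classify a timezone-aware credential expiry date as 'expired', 'expiring' or 'ok'."""
    now = now or datetime.now(timezone.utc)
    if expires_at <= now:
        return "expired"   # would correspond to the Failed status
    if expires_at - now <= WARNING_WINDOW:
        return "expiring"  # would correspond to the Warning status
    return "ok"
```

Running such a check on a schedule (for example, in the same job that uploads your data) gives you an early reminder before the integration status changes.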

Integration Status

The integration status is updated every 10 minutes, so data ingestion is not immediately reflected in the workspace.
